“AI” is now a boardroom staple, but the term covers very different toolkits. Two categories – conversational AI (CAI) and generative AI (GenAI) – are often conflated in headlines and vendor decks, yet they solve different business problems, draw on distinct technical foundations, and come with separate risk profiles.

With GenAI attracting a record $33.9 billion in private investment last year and CAI revenue projected to exceed $150 billion by 2033, leaders need a clear, side-by-side view to guide budgets, talent, and governance.

Why This Comparison Matters in 2025

Executives are flooded with vendor decks promising “AI-powered everything,” yet very different technologies hide behind that two-letter label. In most boardrooms, the discussion quickly becomes a conversational vs generative AI showdown:

- Conversational AI: chatbots, voice IVR, virtual assistants – machine-learning-powered software meant to hold a back-and-forth with a real person.

- Generative AI: large models that generate novel text, images, code, or audio on demand.

Investors are already voting with their wallets. According to PwC, CAI platforms generated $17 billion in global revenue last year, while GenAI startups soaked up $33.9 billion in venture capital. The inevitable question – “generative vs conversational AI: which returns value faster?” – now decides budgets, talent strategies, and even data-center build-outs.

Quick Conversational AI vs Generative AI Definitions Overview

| Term | Core Definition | Deeper Context & Examples |
|---|---|---|
| Conversational AI | Systems that parse user intents and manage dialogue turns to complete a goal. | Think of the voice assistant routing your bank call, the WhatsApp bot confirming an airline seat, or a live agent-assist pane that whispers suggested replies. Conversational AI focuses on goal-oriented exchange: it identifies the user’s intent, extracts key entities (dates, amounts, locations), and maintains a dialogue state so follow-up questions feel coherent. Most platforms combine intent classifiers, slot-filling logic, and response templates, often backed by fine-tuned small language models, so every turn steers the user toward resolution: booking, payment, password reset. Speed, accuracy, and compliance trump creativity; the system must remain terse, factual, and auditable. |
| Generative AI | Models that learn statistical patterns and then create fresh content (words, pictures, code, or sound). | These engines shine when novelty matters. A marketing team feeds a product brief to an LLM that drafts five ad variants; a game studio prompts a diffusion model to render concept art; a fintech startup lets coders auto-generate boilerplate APIs. Under the hood, foundation models with billions of parameters sample likely tokens or pixels, but clever prompting, retrieval-augmented generation (RAG), and human feedback push outputs from “generic” to “on-brand.” Hallucination risk and IP provenance become governance hot spots, yet the payoff (rapid ideation and multimodal creativity) keeps budgets flowing. |

Technical Stack Deep Dive

The moment the hype dies down and procurement starts asking, “Can we actually run this in production?”, the conversation shifts from brand-name models to plumbing. Underneath the glossy demos, conversational AI vs generative AI reveals two very different software kitchens. One is a disciplined diner line that turns out the same meal consistently; the other is an experimental test kitchen eager to improvise. Before we hit the comparison table, let’s set the stage.

Conversational artificial intelligence solutions grew up inside contact-center SLAs: Every millisecond of latency and every percentage point of intent-classification accuracy is contractually measured. Their architectures, therefore, emphasize predictability, traceability, and tight coupling to business systems (CRMs, payment gateways, ticketing platforms).

Generative AI tools, by contrast, evolved from research labs that valued new capabilities (poetic fluency, image realism, self-supervised learning) over strict determinism. Trained on large datasets, they tolerate higher compute loads and lean on probabilistic sampling to produce novel output that resembles real data. Governance layers covering content policy, intellectual property, and legal review are bolted on later to corral that creativity into something usable inside the enterprise.

Core Architecture

| Layer | Conversational AI | Generative AI |
|---|---|---|
| Model heart | Lean intent classifiers, domain-tuned small language models (SLMs), and dialogue-state trackers that map each turn to a business goal. Many stacks embed compact Transformer variants (e.g., DistilBERT) for speed, then fall back to larger LLMs only when confidence drops. | Giant foundation models like GPT-4o, Claude 4, Gemini 2, or multimodal diffusion–Transformer hybrids that handle text-to-image, text-to-code, or text-to-speech. These models rely on billions of parameters and server-class GPUs or TPUs. |
| Memory | A short-term context window (usually the last 5-20 user turns) plus live retrieval from CRMs, knowledge bases, or product catalogs so the bot never hallucinates SKU prices or policy numbers. | External vector databases (FAISS, Pinecone, Weaviate) store long-range semantic embeddings that maintain stylistic consistency across a 3,000-word blog post or a multi-scene storyboard. |
| Reasoning loop | “Slot filling” and form logic dominate: the system asks for missing entities (“What date would you like to fly?”), checks them against validation rules, and branches along a finite-state graph. Rule engines or lightweight reinforcement learning tweak wording but rarely rewrite the flow. | Stochastic sampling and explicit chain-of-thought prompting drive the reasoning, while RAG grounding fetches authoritative snippets that the LLM weaves into fluent prose. Instead of rigid branches, GenAI reasons in free form, guided by probability distributions and temperature settings. |
| Output guardrails | Deterministic policies: regex filters for PII, PCI masking, profanity blocklists, plus business-logic checks (“only refund if warranty valid”). Well-governed CAI stacks can support ISO 27001 and HIPAA audits. | Post-generation filters for toxicity, copyright matches, or disallowed topics; sometimes real-time watermarking or “AI-fact” citations. Because the core model is non-deterministic, multiple safety nets (policy tuning, RLHF, red-teaming) run in parallel. |
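
To make the conversational "reasoning loop" row concrete, here is a minimal Python sketch of slot filling with validation. It is an illustration under stated assumptions, not any vendor's implementation: the slot names, prompts, and the `DialogueState` and `step` helpers are hypothetical, and a production system would extract entities with a trained model rather than these simple parsers.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Optional


def parse_date(text: str) -> Optional[date]:
    """Accept ISO dates such as 2025-07-01; return None when parsing fails."""
    try:
        return date.fromisoformat(text.strip())
    except ValueError:
        return None


def parse_city(text: str) -> Optional[str]:
    """Accept a non-empty alphabetic city name; return None otherwise."""
    cleaned = text.strip()
    return cleaned.title() if cleaned.replace(" ", "").isalpha() else None


# Hypothetical slot schema: slot name -> (prompt, validator). Order defines the flow.
SLOTS = {
    "destination": ("Where would you like to fly?", parse_city),
    "travel_date": ("What date would you like to fly?", parse_date),
}


@dataclass
class DialogueState:
    filled: dict = field(default_factory=dict)

    def next_missing(self) -> Optional[str]:
        """Return the first slot that has no validated value yet."""
        for name in SLOTS:
            if name not in self.filled:
                return name
        return None


def step(state: DialogueState, user_text: Optional[str]) -> str:
    """Advance one turn: validate the answer to the pending slot, then ask for the next."""
    pending = state.next_missing()
    if pending is not None and user_text is not None:
        prompt, validator = SLOTS[pending]
        value = validator(user_text)
        if value is None:
            # Validation failed: stay on the same slot and re-ask.
            return f"Sorry, I didn't catch that. {prompt}"
        state.filled[pending] = value
    pending = state.next_missing()
    if pending is None:
        when = state.filled["travel_date"].isoformat()
        return f"Booking {state.filled['destination']} on {when}."
    return SLOTS[pending][0]
```

Calling `step(state, None)` opens the dialogue with the first prompt; each later call passes the user's reply. Because the flow only advances when a validator accepts the input, every branch is deterministic and easy to log.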

Data Pipelines

The data that powers each stack is just as revealing as the code:

Conversational AI ingestion loop

- Raw logs from chat, voice, and IVR sessions stream into a labeling platform.

- Annotators tag intents (“check-order-status”), entities (“order #12345”, “tomorrow”), and sentiment.

- Daily or weekly fine-tunes refresh the SLM so the bot adapts to slang, seasonality, or new product lines.

- Acoustic features (MFCCs, jitter, prosody) flow into separate ASR and TTS models for voice channels.

- Cleaned transcripts feed analytics dashboards measuring containment, CSAT, and average handle time.
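
The dashboard metrics in the last step can be computed from session logs in a few lines. The sketch below is illustrative only: the `Session` record and its fields are hypothetical, containment is taken to mean "not escalated to a live agent," and CSAT is assumed to be the share of survey respondents scoring 4 or 5, which is one common convention rather than a universal definition.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Optional, Sequence


@dataclass
class Session:
    handle_seconds: float   # total duration of the session
    escalated: bool         # True if the bot handed off to a live agent
    csat: Optional[int]     # 1-5 survey score, None if the survey was skipped


def containment_rate(sessions: Sequence[Session]) -> float:
    """Share of sessions the bot resolved without a human hand-off."""
    return sum(not s.escalated for s in sessions) / len(sessions)


def average_handle_time(sessions: Sequence[Session]) -> float:
    """Mean session duration in seconds."""
    return mean(s.handle_seconds for s in sessions)


def csat_score(sessions: Sequence[Session]) -> Optional[float]:
    """Share of survey respondents who answered 4 or 5 (assumed convention)."""
    scores = [s.csat for s in sessions if s.csat is not None]
    if not scores:
        return None
    return sum(score >= 4 for score in scores) / len(scores)


# Hypothetical sample data for illustration.
sample_sessions = [
    Session(120, False, 5),
    Session(300, True, 2),
    Session(90, False, None),
    Session(210, False, 4),
]
```

With the sample above, three of four sessions are contained and the average handle time is 180 seconds.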

Generative AI fueling station

- Terabytes of web corpora (Common Crawl, academic journals, code on GitHub) pass through de-duplication and toxicity scrubbing.

- Synthetic and proprietary datasets (e.g., purchase emails, internal wikis, design systems) are optionally blended in via low-rank adaptation (LoRA) or other parameter-efficient tuning methods.

- A massive distributed training job spins for days to weeks across thousands of GPUs, emitting checkpoints.

- Inference-time RAG pipelines index curated documents so the LLM can quote chapter and verse rather than hallucinate.

- Every generation is logged, scored by human or automated critics, and recycled as reinforcement-learning feedback.
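
The inference-time RAG step above boils down to "rank documents against the query, then paste the best ones into the prompt." The Python sketch below shows that shape with a deliberately crude stand-in: word-count vectors and cosine similarity replace the learned embeddings and vector database a real pipeline would use, and the `embed`, `retrieve`, and `build_prompt` names are hypothetical.

```python
import math
import re
from collections import Counter
from typing import List


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (a real system would use a learned model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, documents: List[str], k: int = 2) -> List[str]:
    """Return the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, documents: List[str], k: int = 2) -> str:
    """Assemble a grounded prompt that asks the model to cite numbered sources."""
    passages = retrieve(query, documents, k)
    context = "\n".join(f"[{i + 1}] {p}" for i, p in enumerate(passages))
    return (
        "Answer using only the sources below and cite them by number.\n\n"
        f"Sources:\n{context}\n\nQuestion: {query}"
    )


# Hypothetical knowledge-base snippets for illustration.
DOCS = [
    "Refunds are issued within 14 days of a returned order.",
    "Standard shipping takes 3 to 5 business days.",
    "Gift cards cannot be exchanged for cash.",
]
```

The string returned by `build_prompt` is what would be sent to the LLM; keeping the sources numbered is what lets the answer "quote chapter and verse."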

In short, CAI consumes millions of precisely labeled utterances and acoustic cues, whereas GenAI devours oceans of semi-structured or unstructured content (text, images, code, and captions) to master the latent space of human expression. One pipeline resembles a well-kept assembly line; the other looks like a vast library feeding an improvisational playwright.

Generative AI vs Conversational AI Capabilities at a Glance

When non-technical stakeholders ask “So what can each kind of AI actually do?” they are usually probing for five things:

- Scope of outputs – words, images, actions, or a mix.

- Latency tolerance – sub-second back-and-forth versus “give it a minute to think.”

- Reliability under guardrails – will it obey policy every single time?

- Need for tool orchestration – does the model finish the job on its own or must it call APIs?

- Head-room for novelty – can it surprise and delight, or must it stay on script?

Those questions map neatly onto the feature grid below, which shows where conversational AI and generative AI each shine, and where each borrows the other’s strengths.

| Capability | Conversational AI | Generative AI |
|---|---|---|
| Real-time dialogue | ✔ Sub-second intent detection keeps voice or chat flows snappy. | ◑ Possible in lightweight chat UIs but stalls on multi-paragraph responses. |
| Long-form copywriting | ◔ Templated paragraphs and variable injection work, but tone can feel robotic. | ✔ 500-word blog posts, 100-slide decks, even legal clauses on demand. |
| Image / video output | ✘ Not natively supported; would require external GenAI service. | ✔ Text-to-image diffusion, video synthesis, 3-D object generation. |
| Structured task handling | ✔ Seamless booking, refunds, form fills; integrates with RPA and CRMs. | ◑ Needs “function-calling” or orchestration layers to hit enterprise APIs. |
| Creativity / variability | ◔ Low by design: clarity > surprise. | ✔ High; stochastic sampling introduces endless phrasing or visual styles. |
| Personalization on real-time data | ✔ Slot filling with CRM look-ups enables customer-specific offers. | ◑ Achievable via retrieval-augmented generation (RAG) but slower, costlier. |
| Multi-modal input understanding | ◔ Mostly text + voice; experimental image intent detection emerging. | ✔ Can ingest text, code, images, and audio in a single prompt. |
| Factual grounding / citation | ✔ Pulls from curated KB articles; low hallucination rate. | ◑ Needs RAG or external validators; hallucinations remain a risk. |
| Explainability & audit | ✔ Finite-state flows and intent logs make decisions traceable. | ◑ Token-level saliency maps help, but full reasoning chain stays opaque. |
| Privacy & compliance fit | ✔ Easier to sandbox PII; models are smaller, easier to host on-prem. | ◑ Large models often run in third-party clouds; redaction layers required. |
| Latency sensitivity | ✔ Tuned for ≤ 300 ms round-trip in voice IVR. | ◑ Optimized inference pipelines hit 1–2 s, but heavy prompts still spike. |
| Conversation memory span | ◔ Remembers recent turns plus explicit CRM context. | ✔ Long-context windows (128 k tokens +) keep track of multipage briefs. |
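
The "function-calling" layer mentioned in the structured-task row can be pictured as a small dispatcher between the model and enterprise APIs. The Python sketch below assumes the model emits a JSON object with `name` and `arguments` keys, a shape that resembles several vendors' tool-calling formats but is used here purely as an illustration; `get_order_status`, `TOOLS`, and `dispatch` are hypothetical names, and the handler is a stub rather than a real API call.

```python
import json
from typing import Any, Dict


def get_order_status(order_id: str) -> Dict[str, str]:
    """Stub standing in for a real order-management API call."""
    return {"order_id": order_id, "status": "shipped"}


# Allow-list of callable tools and the argument names each one requires.
TOOLS: Dict[str, Dict[str, Any]] = {
    "get_order_status": {"handler": get_order_status, "required": {"order_id"}},
}


def dispatch(model_output: str) -> Dict[str, Any]:
    """Validate a JSON tool call emitted by a model and run it only if allowed."""
    try:
        call = json.loads(model_output)
    except json.JSONDecodeError:
        return {"error": "model output was not valid JSON"}
    if not isinstance(call, dict) or not isinstance(call.get("name"), str):
        return {"error": "tool call must be an object with a string 'name'"}

    tool = TOOLS.get(call["name"])
    if tool is None:
        return {"error": f"unknown tool: {call['name']}"}

    args = call.get("arguments", {})
    if not isinstance(args, dict) or set(args) != tool["required"]:
        return {"error": "arguments do not match the tool schema"}

    return {"result": tool["handler"](**args)}
```

The design choice worth noting is that the model never touches the API directly: it only proposes a call, and deterministic code decides whether that call is permitted, which is how a generative front end can inherit some of the auditability of a conversational stack.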

Generative vs Conversational AI Business Use Cases Showdown

Customer Experience & Service

CAI in production. Telcos, airlines, and banks rely on conversational AI to keep support queues from melting down. Verizon’s new Gemini-powered assistant inside the My Verizon app now resolves upgrades, billing questions, and SIM swaps with 90 % accuracy, deflecting thousands of tickets that once hit live agents. Ticket-deflection specialists such as Forethought report that a well-trained bot can cut inbound volume by 20–30 % in under two months.

GenAI on the agent desktop. Zendesk’s call-center plug-in auto-generates post-call summaries and tags, freeing agents from note-taking and trimming wrap-up time by almost a minute per ticket. Microsoft’s Dynamics 365 Copilot goes a step further, drafting context-rich follow-up emails “in seconds,” a task that used to take agents several minutes.

The winning pattern is CAI up front, GenAI behind the curtain: the bot handles real-time dialogue and hand-offs while large language models generate human-sounding summaries, sentiment briefs, or escalation emails.

In other words, generative AI vs conversational AI is not a fork in the road but a relay race between speed and eloquence.

Marketing & Content

GenAI’s creative turbo-boost. Coca-Cola’s “Create Real Magic” campaign let digital artists remix the brand’s iconography with GPT-4 and DALL-E, collapsing weeks of agency iterations into days. Internal Bain analytics showed a 70 % reduction in creative-cycle time.

CAI’s conversion muscle. Sephora’s reservation chatbot drives shoppers from Instagram chat into in-store makeover bookings, posting an 11 % higher conversion rate than traditional web forms.

Marketers asking “conversational vs generative AI – where first?” usually land on a split investment: GenAI for brainstorming and asset generation at the top of the funnel, CAI chat widgets for lead capture and guided shopping at the bottom.

Healthcare

CAI for compliant triage. Babylon Health’s HIPAA-aligned chatbot screens symptoms, flags red alerts to clinicians, and routes routine user queries autonomously, preserving scarce nurse time.

GenAI for plain-language care. UCSF Health reports that LLM-based copilots can rewrite discharge summaries into sixth-grade English, improving patient care and comprehension scores by double digits.

Predictive AI closes the loop. A recent EHR-integrated model predicts outpatient no-shows with 86 % accuracy, letting schedulers over-book intelligently and slash idle clinical minutes.

Together, these illustrate the full generative AI vs conversational AI vs predictive AI triangle: triage bots converse, LLMs translate, and forecasts optimize capacity.

eCommerce

CAI for post-purchase care. Fashion giant H&M’s website chatbot tracks orders, processes returns, and recommends sizes, reducing wait times and boosting CSAT across web and social channels.

GenAI for merchant efficiency. eBay’s award-winning “Magical Listing” tool writes product descriptions and polishes images, saving sellers minutes per SKU and earning the 2024 AI Breakthrough prize for “Best Overall Generative-AI Solution”. Shopify’s “Magic” suite offers similar instant copy-and-discount generation for small merchants, helping the platform outpace overall e-commerce growth.

Retailers increasingly layer demand-forecast models on top of these tools to guide dynamic pricing and stock replenishment, yet another case where predictive joins the generative vs conversational duo.

Conversational vs Generative AI Benefits & Limitations

Conversational AI Pros

- Targeted goal-completion. Contact-center platforms track hard KPIs such as intent-match accuracy, containment rate, and CSAT uplift, so business impact is provable rather than anecdotal.

- Low hallucination risk. Finite-state flows and retrieval from approved knowledge bases mean answers stay inside policy boundaries.

- Fine-grained analytics & compliance. Every user turn is logged with timestamps and sentiment, helping teams meet ISO 27001 or HIPAA audits.

- Lean compute footprint. A production IVR bot typically runs on CPUs or small GPUs, costing a fraction of LLM inference.

Conversational AI Cons

- Intent rigidity. When users stray outside trained intents, the bot reverts to fallback scripts, eroding experience quality.

- High design upkeep. Conversation designers must refresh utterance libraries as products, regulations, or slang evolve.

- Multilingual expansion is data-hungry. Each new language demands fresh labeled dialogs or transfer-learning passes; low-resource tongues remain under-served.

Generative AI Pros

- Boundless drafts and modalities. One prompt can yield blog copy, hero images, code stubs, or voice-overs, fueling rapid ideation across teams.

- Creative variability. Temperature tuning and style prompts let brands A/B-test dozens of variants in minutes, something CAI cannot match.

- Long-context reasoning. New 128 k-token windows keep multi-document coherence, ideal for research summaries or policy generation.
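
The temperature tuning mentioned above has a simple mechanical meaning: the model's raw scores (logits) are divided by the temperature before being turned into probabilities, so low values concentrate probability on the top candidate and high values spread it out. A minimal Python sketch, with hypothetical tokens and logits chosen only for illustration:

```python
import math
import random
from typing import List, Sequence


def softmax_with_temperature(logits: Sequence[float], temperature: float) -> List[float]:
    """Convert logits to probabilities after scaling by 1 / temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample(tokens: Sequence[str], logits: Sequence[float],
           temperature: float, rng: random.Random) -> str:
    """Draw one token according to the temperature-adjusted distribution."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]


# Hypothetical next-word candidates for an ad headline.
TOKENS = ["refreshing", "bold", "unexpected"]
LOGITS = [2.0, 1.0, 0.5]

cool = softmax_with_temperature(LOGITS, 0.2)   # sharply peaked on the first token
warm = softmax_with_temperature(LOGITS, 2.0)   # much flatter distribution
```

Comparing `cool` and `warm` shows why a low temperature suits policy-bound replies while a higher one suits variant generation for A/B tests.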

Generative AI Cons

- Hallucination remains a live hazard. Recent studies peg ungrounded error rates between 15 % and 20 % for mainstream LLMs, even higher in low-resource languages.

- IP & content ambiguity. Outputs may embed copyrighted phrases or look-alike imagery, requiring costly legal review.

- Bias amplification. Models inherit and can magnify societal stereotypes unless rigorously red-teamed.

- Heavy GPU bills. Cloud inference for GPT-class models runs about $0.06 per 1 k tokens, and self-hosted clusters can top $2 k per month per GPU instance.

- Energy & carbon footprint. Multi-billion-parameter training runs consume megawatt-hours, drawing regulatory scrutiny in the EU and U.S.
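
To see how the GPU-bill figures above translate into a budget line, the following Python sketch runs the arithmetic. The two prices come from the list above; the monthly volume (100,000 requests of 800 tokens each) is a hypothetical workload chosen only to make the numbers concrete, and the function names are illustrative.

```python
# Prices quoted in the list above.
PRICE_PER_1K_TOKENS_USD = 0.06
GPU_INSTANCE_MONTHLY_USD = 2_000.0


def monthly_api_cost(requests_per_month: int, tokens_per_request: int,
                     price_per_1k: float = PRICE_PER_1K_TOKENS_USD) -> float:
    """Cloud inference cost: total tokens, billed per thousand."""
    total_tokens = requests_per_month * tokens_per_request
    return total_tokens / 1_000 * price_per_1k


def equivalent_gpu_instances(api_cost_usd: float,
                             gpu_monthly: float = GPU_INSTANCE_MONTHLY_USD) -> float:
    """How many self-hosted GPU instances the same monthly spend would cover."""
    return api_cost_usd / gpu_monthly


# Hypothetical workload: 100,000 requests per month, 800 tokens each.
api_cost = monthly_api_cost(100_000, 800)
gpu_equivalent = equivalent_gpu_instances(api_cost)
```

For this assumed workload, 80 million tokens at $0.06 per thousand comes to roughly $4,800 a month, about the price of 2.4 self-hosted GPU instances at the quoted rate. Whether self-hosting wins then depends on how many requests one instance can actually serve, which the figures above do not specify.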

CAI excels where reliability, cost, and auditability dominate; GenAI wins where breadth, creativity, and multimodality drive value. Pairing them, rather than picking one, lets enterprises tap both precision and invention.

What are the main differences between conversational AI and generative AI?

Conversational AI focuses on understanding and responding to human language in real time, often following predefined dialogue flows, which makes it well suited to customer service. Generative AI, in contrast, creates new content based on patterns learned from training data. In short, conversational AI is built for interaction, while generative AI is built for creativity and content generation, and the two play complementary roles.

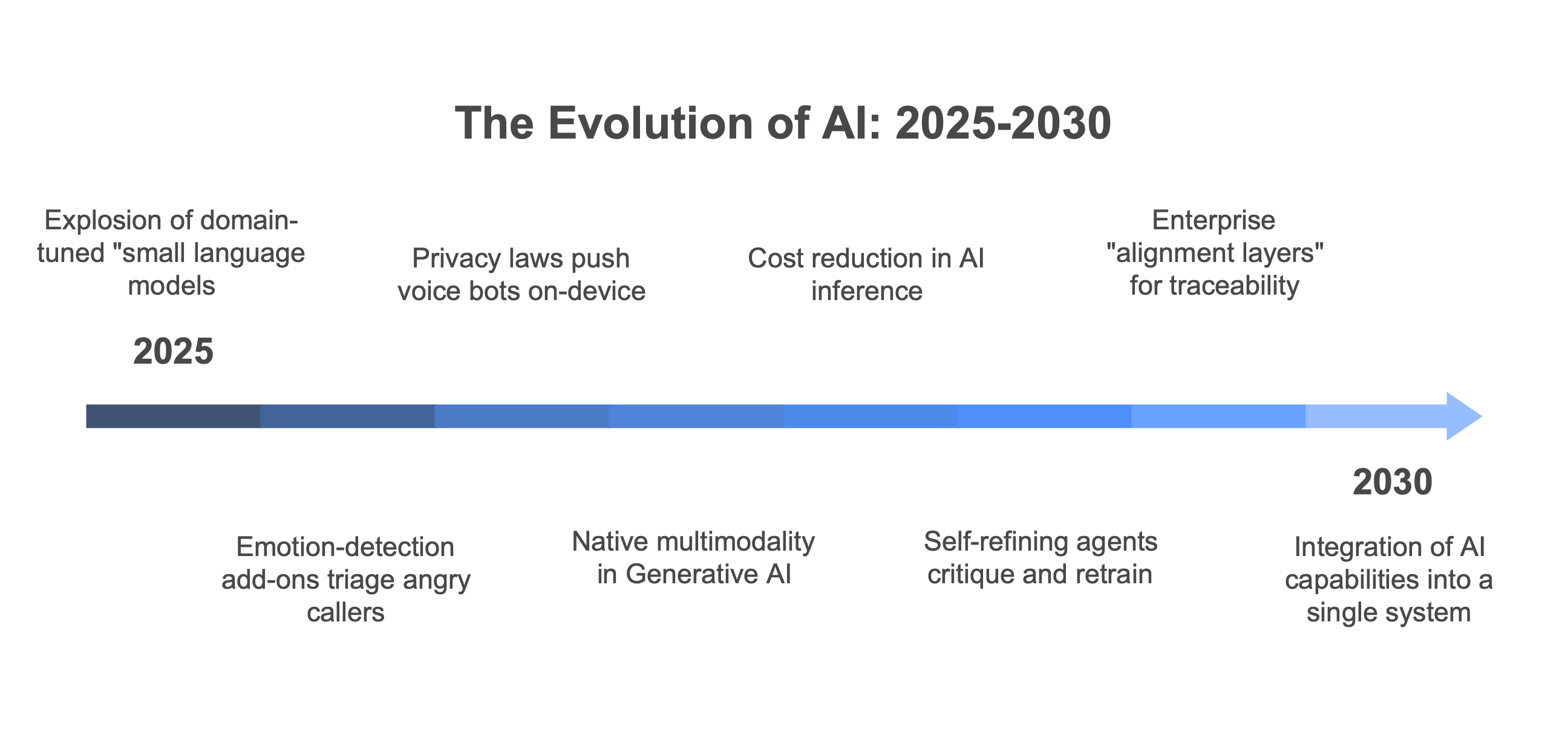

Generative AI vs Conversational AI vs Predictive AI Future Outlook (2025 – 2030)

Regarding conversational AI, we can expect an explosion of domain-tuned “small language models” (SLMs) that fit on a single edge TPU, plus federated-learning loops that let a retailer, a hospital, and a bank each refine the same base model locally without sharing raw transcripts. Emotion-detection add-ons will triage angry callers to live agents, and privacy laws will push more voice bots to run on-device inside cars, wearables, and smart TVs.

One of the headline market trends for generative AI is native multimodality: text → image → video → 3-D objects in one prompt chain. Under the hood, vendors will slash inference cost via sparsity pruning, Mixture-of-Experts routing, and low-bit quantization, bringing “GPT-4-class” power to sub-10¢ queries. We’ll also see self-refining agents that critique and retrain themselves overnight, plus enterprise “alignment layers” that watermark every pixel or sentence for downstream traceability.

By 2030, the question will not be “generative vs conversational AI?” but “how do we braid conversational, generative, and predictive capabilities into a single governance and cost envelope, with effective risk management?” The most resilient firms will treat CAI, GenAI, and predictive stacks as mutually reinforcing layers (front-end empathy, mid-tier creativity, and back-end foresight), each with its own carbon budget, risk tier, and talent pool.

Final Word

Think of conversational AI as your brand’s voice – precise, always on, and audit-ready. Picture generative AI as the creative pen – drafting, illustrating, and coding at the speed of thought.

When these intelligences operate in concert, customers feel heard in the moment, which lifts engagement and satisfaction; content teams iterate in real time; and executives steer the company with data-backed foresight about customer behavior. Mastering the interplay between conversational AI and generative AI is not just a technical upgrade; it’s the operating system for a business that can converse, create, and forecast at truly super-human scale.
