Have you ever chatted with a robot and felt like you were talking to a real person? That’s the magic of conversational AI! It’s like getting a helping hand right when you need it. Thanks to natural language processing and machine learning, it can understand what you’re saying and respond in a way that feels personal and useful.

From customer service to product development, conversational AI is used across industries to improve customer experience and satisfaction.

So, let’s explore what conversational AI means, how it works, where it is applied, and its benefits and challenges. We'll also look at real-world examples of how brands use it to deliver better customer experience, convenience, and support.

What is conversational AI?

Conversational artificial intelligence (AI) is an advanced form of AI that enables machines to hold human-like, interactive conversations with users. The technology understands and interprets human language to simulate natural conversation, and it can learn from customer interactions over time to respond in context.

Conversational AI systems are widely used in applications such as AI chatbots, voice assistants, and customer support platforms across digital and telecom channels. Here are some key statistics that illustrate its impact:

- The global conversational AI market was valued at $6.8 billion in 2021 and is projected to grow to $18.4 billion by 2026 at a CAGR of 22.6%.

- Despite its prevalence, 63% of users are unaware that they use AI daily.

- A Gartner survey found that many companies have identified chatbots as their primary AI application, and Gartner predicted that nearly 70% of white-collar workers would interact with conversational platforms daily by 2022.

- Since the pandemic, interactions with conversational agents have increased by 250% across several industries.

- In 2022, 91% of adult voice assistant users used conversational AI technology on their smartphones.

- Among tech professionals worldwide, nearly 80% use virtual assistants for customer service.

These statistics highlight conversational AI’s growing adoption and impact across industries and consumer behavior.
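The market-size figures in the first statistic are linked by the compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. The short Python sketch below applies that formula to the quoted 2021 and 2026 values. Published market figures are rounded, so the recomputed rate lands near the stated 22.6% rather than exactly on it.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate between two values."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: float) -> float:
    """Value after compounding start_value at `rate` for `years` years."""
    return start_value * (1 + rate) ** years


# Market figures quoted above, in US$ billions (2021 -> 2026 is 5 years).
start_2021, end_2026 = 6.8, 18.4

implied = cagr(start_2021, end_2026, 5)
print(f"Implied CAGR: {implied:.1%}")  # about 22.0%
print(f"6.8 compounded at 22.6% for 5 years: {project(start_2021, 0.226, 5):.1f}")  # about 18.8
```

The calculation also works in reverse. Compounding US$6.8 billion at 22.6% for five years gives roughly US$18.8 billion, so the quoted 2026 figure is consistent with the stated rate to within rounding.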

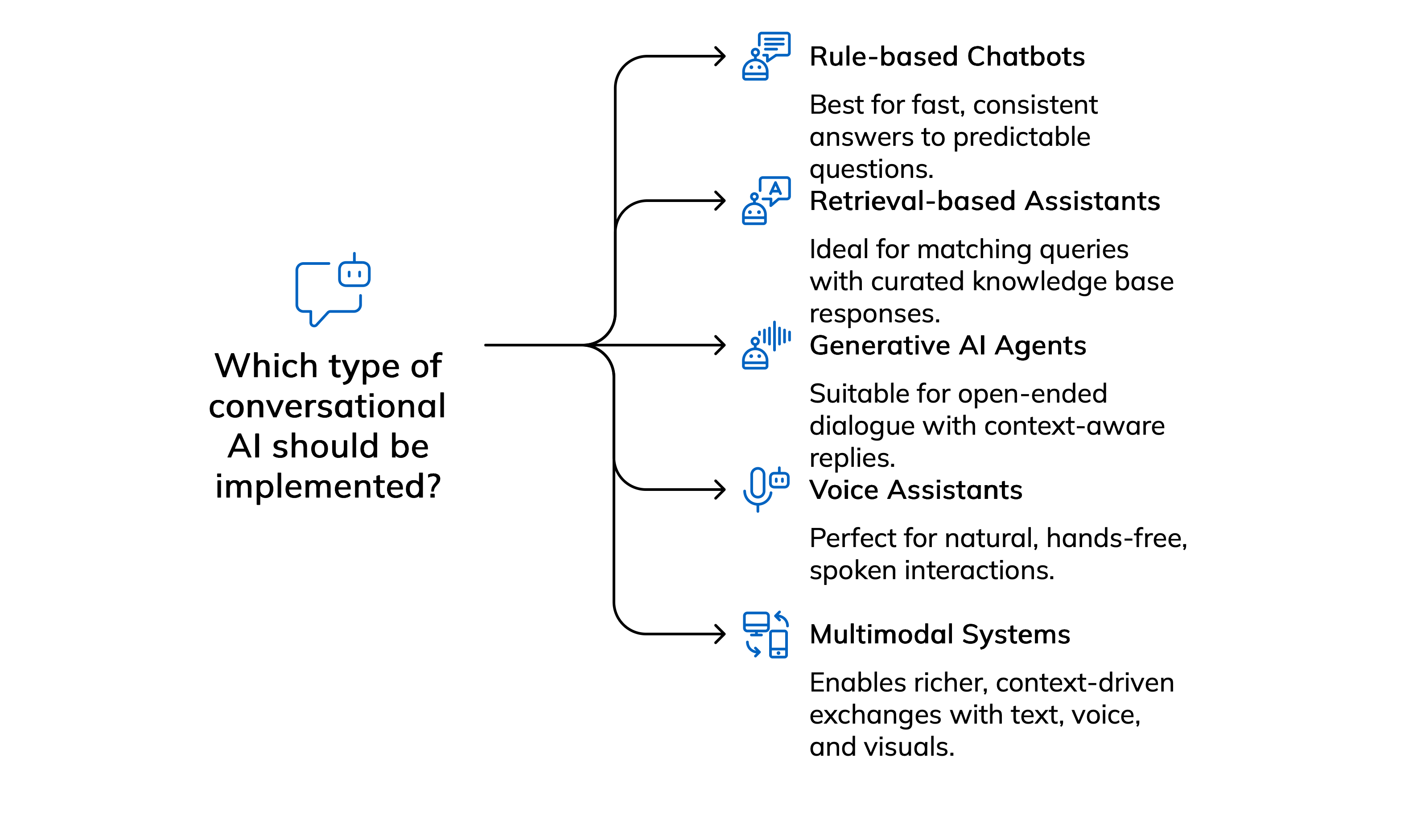

Types of conversational AI

Conversational AI comes in several distinct flavors, each optimized for different interaction styles and business goals. Below are the core types you’ll most often encounter:

- Rule-based chatbots – follow scripted decision trees to deliver fast, consistent answers to predictable questions.

- Retrieval-based assistants – use NLP to match user queries with the best-fitting response from a curated knowledge base.

- Generative AI agents – rely on large language models to compose original, context-aware replies for open-ended dialogue.

- Voice assistants – combine speech recognition and synthesis for natural, hands-free, spoken interactions.

- Multimodal conversational systems – process and return text, voice, and visuals together, enabling richer, context-driven exchanges.
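The first type on this list is easy to sketch, which makes the contrast with the others concrete. Below is a minimal rule-based chatbot that walks a scripted decision tree. The tree, its node names, and its replies are hypothetical. The point is that every reply is pre-written, and the bot can only follow the branches it was given.

```python
# A hypothetical scripted decision tree: each node has a prompt and the
# user choices that lead to the next node. Leaf nodes have no options.
TREE = {
    "start": {
        "prompt": "Hi! Do you need help with 'orders' or 'returns'?",
        "options": {"orders": "orders", "returns": "returns"},
    },
    "orders": {
        "prompt": "Is your order 'late' or 'damaged'?",
        "options": {"late": "late", "damaged": "damaged"},
    },
    "returns": {"prompt": "You can return items within 30 days via your account page.", "options": {}},
    "late": {"prompt": "Sorry! Late orders usually arrive within 2 extra days.", "options": {}},
    "damaged": {"prompt": "Please upload a photo and we will send a replacement.", "options": {}},
}


def run_script(user_choices):
    """Walk the tree with a list of user inputs; return the bot's messages."""
    node = "start"
    transcript = [TREE[node]["prompt"]]
    for choice in user_choices:
        options = TREE[node]["options"]
        key = choice.strip().lower()
        if key not in options:
            # Rule-based bots cannot improvise: unknown input gets a fallback.
            transcript.append("Sorry, I didn't understand. Please choose: " + ", ".join(options))
            continue
        node = options[key]
        transcript.append(TREE[node]["prompt"])
        if not TREE[node]["options"]:
            break  # reached a leaf: the scripted conversation ends
    return transcript


for line in run_script(["Orders", "broken", "damaged"]):
    print(line)
```

The fallback branch is the weakness that the other types address. A retrieval-based or generative system would try to interpret "broken" instead of rejecting it.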

How does conversational AI work?

This smart tech combines an interactive interface with Natural Language Processing (NLP) and Machine Learning (ML), the same building blocks behind assistants like Amazon Alexa, to create human-like conversations. NLP lets it understand what people are saying, and ML helps it improve over time, so interactions feel more personal and helpful.

Here is a breakdown of the steps in this process:

- Step #1: User’s input. Users enter their questions or queries into the conversational AI system by typing, speaking, or combining both.

- Step #2: Natural language processing. When users enter their queries or requests, the conversational AI’s NLP component analyzes the input data by breaking it down into sentences, phrases, and words. This allows it to understand the intent or purpose of the user’s request.

- Step #3: Machine learning. The machine learning-based system uses the NLP-processed data to generate a response tailored to the user’s needs. The machine learning component improves accuracy and performance by continuously learning from each interaction.

- Step #4: Response generation. Finally, the ML component generates a response, which can be in text, speech, or a combination of both. The user receives the response, completing the conversational cycle.
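The four steps can be sketched end to end in a few dozen lines. This toy version is an illustrative assumption, not how any particular product works:

- The NLP step is simple tokenization.
- The ML step is a small multinomial Naive Bayes intent classifier. It can be updated with new labeled examples, which stands in for "learning from each interaction."
- Response generation uses fixed, hypothetical templates.

Production systems use far larger models, but the flow is the same.

```python
import math
import re
from collections import Counter, defaultdict


def tokenize(text):
    """Step 2 (NLP): lowercase the input and split it into word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


class IntentClassifier:
    """Step 3 (ML): multinomial Naive Bayes with add-one smoothing."""

    def __init__(self):
        self.word_counts = defaultdict(Counter)  # intent -> word frequencies
        self.intent_counts = Counter()           # intent -> number of examples
        self.vocab = set()

    def learn(self, text, intent):
        """Add one labeled example; calling this later keeps improving the model."""
        tokens = tokenize(text)
        self.word_counts[intent].update(tokens)
        self.intent_counts[intent] += 1
        self.vocab.update(tokens)

    def predict(self, text):
        """Return the intent with the highest log-probability for the text."""
        total = sum(self.intent_counts.values())
        best_intent, best_score = None, -math.inf
        for intent, n_examples in self.intent_counts.items():
            words = self.word_counts[intent]
            denom = sum(words.values()) + len(self.vocab)
            score = math.log(n_examples / total)
            for token in tokenize(text):
                score += math.log((words[token] + 1) / denom)
            if score > best_score:
                best_intent, best_score = intent, score
        return best_intent


# Step 4: hypothetical response templates for each intent.
RESPONSES = {
    "check_balance": "Your balance is shown under Accounts > Overview.",
    "lost_card": "I've flagged your card as lost and ordered a replacement.",
}

model = IntentClassifier()
for text, intent in [
    ("what is my balance", "check_balance"),
    ("how much money do I have", "check_balance"),
    ("I lost my card", "lost_card"),
    ("my card was stolen", "lost_card"),
]:
    model.learn(text, intent)

user_input = "I think my card is lost"      # Step 1: user input
intent = model.predict(user_input)          # Steps 2-3: understand the intent
print(intent, "->", RESPONSES[intent])      # Step 4: generate the response
```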

Conversational AI Examples

Conversational AI is significantly impacting various industries, transforming how businesses connect with people. From banking and healthcare to fast food and automotive, it’s helping companies deliver faster, more personalized service. Let’s look at a few industry examples to see how it works.

1. Banking – HSBC’s “Amy” virtual assistant

Use Case: Voice-based banking assistant

HSBC introduced “Amy” to help customers with everyday tasks – checking balances, resetting PINs, reporting lost cards, and more. The assistant provides 24/7 support, reducing hold times and improving access when live agents are unavailable.

2. Healthcare – “Eva” conversational AI agent by Cencora & Infinitus

Use Case: Insurance workflow automation & elder companionship

Cencora deployed Eva to handle insurance communications (benefit verifications and drug services), performing the equivalent of 100+ full-time roles and speeding up processing by 4×. Additionally, apps like Everfriends offer AI companionship to older adults, and early studies show decreased loneliness.

3. Automotive – Mercedes‑Benz AI assistant in CLA series

Use Case: In-car conversational AI assistant

Using Google Cloud’s automotive AI, Mercedes-Benz embedded an intelligent assistant in CLA models to handle conversational searches and help with navigation. They’re also building a generative AI-powered storefront assistant to streamline online car shopping.

4. Fast food – Bojangles’ “Bo‑Linda” drive‑thru AI

Use Case: Conversational AI ordering in quick-service restaurants

Bojangles teamed up with Hi Auto to deploy “Bo‑Linda” at drive-thrus, achieving about 95% accuracy in order-taking. This reduces staff stress and turnover, allowing employees to prioritize food prep and customer service.

5. Recruitment – “Tengai” robot interviewer by Furhat Robotics & TNG

Use Case: Bias-reducing job interview assistant

Tengai is a Furhat robot deployed by the Swedish recruitment firm TNG to run structured interviews. It asks every candidate questions in the same consistent, neutral manner, which is intended to reduce unconscious bias. Media reports on the deployment have highlighted its potential to reduce recruiter bias.

Business values of implementing conversational AI

Lower service costs: US$11 billion saved each year

Juniper Research data show that chatbots handling common queries without live agents can save companies in retail, banking, and healthcare up to $11 billion a year in operating costs. That figure alone explains why finance chiefs green-light conversational AI projects so quickly.

Massive time savings: ≈ 2.5 billion agent hours reclaimed

The same research finds chatbots free support teams from nearly 2.5 billion hours of routine interactions, time that can be reinvested in complex cases and proactive outreach. In other words, conversational AI doesn’t just cut costs – it hands companies an extra workforce’s worth of capacity every year.

Direct revenue growth: US$142 billion in retail spend by 2024

Juniper Research projected that shoppers would route $142 billion of purchases through chatbots by 2024 (up from $2.8 billion in 2019). That suggests these assistants aren’t only for service: they’re a checkout lane that is always open. Retailers embracing conversational commerce capture incremental sales and richer first-party data in the same move.

Higher customer satisfaction & loyalty: 72 % demand “immediate” answers

Zendesk’s CX Trends 2023 report found that 72 percent of customers want immediate service. Meeting that bar with AI supports retention, because speed is now a loyalty driver. Brands that respond in real time tend to see lower churn and stronger upsell rates than slower competitors.

Employee productivity lift: +14 % issues resolved per hour

A large-scale study of 5,179 support agents found that access to a generative-AI copilot increased issues resolved per hour by 14 percent on average (and by 34 percent for novice and low-skilled agents), while reducing escalations and turnover. Conversational AI doesn’t replace staff; it makes them measurably more effective.

Data-driven personalization ROI: 5–15 % revenue lift, 10–30 % marketing ROI boost

McKinsey finds that AI-powered one-to-one messaging can lift revenues by 5–15 percent and raise marketing ROI by 10–30 percent through better targeting and timing. Those numbers turn personalization from a “nice-to-have” into one of the clearest business cases for conversational AI.

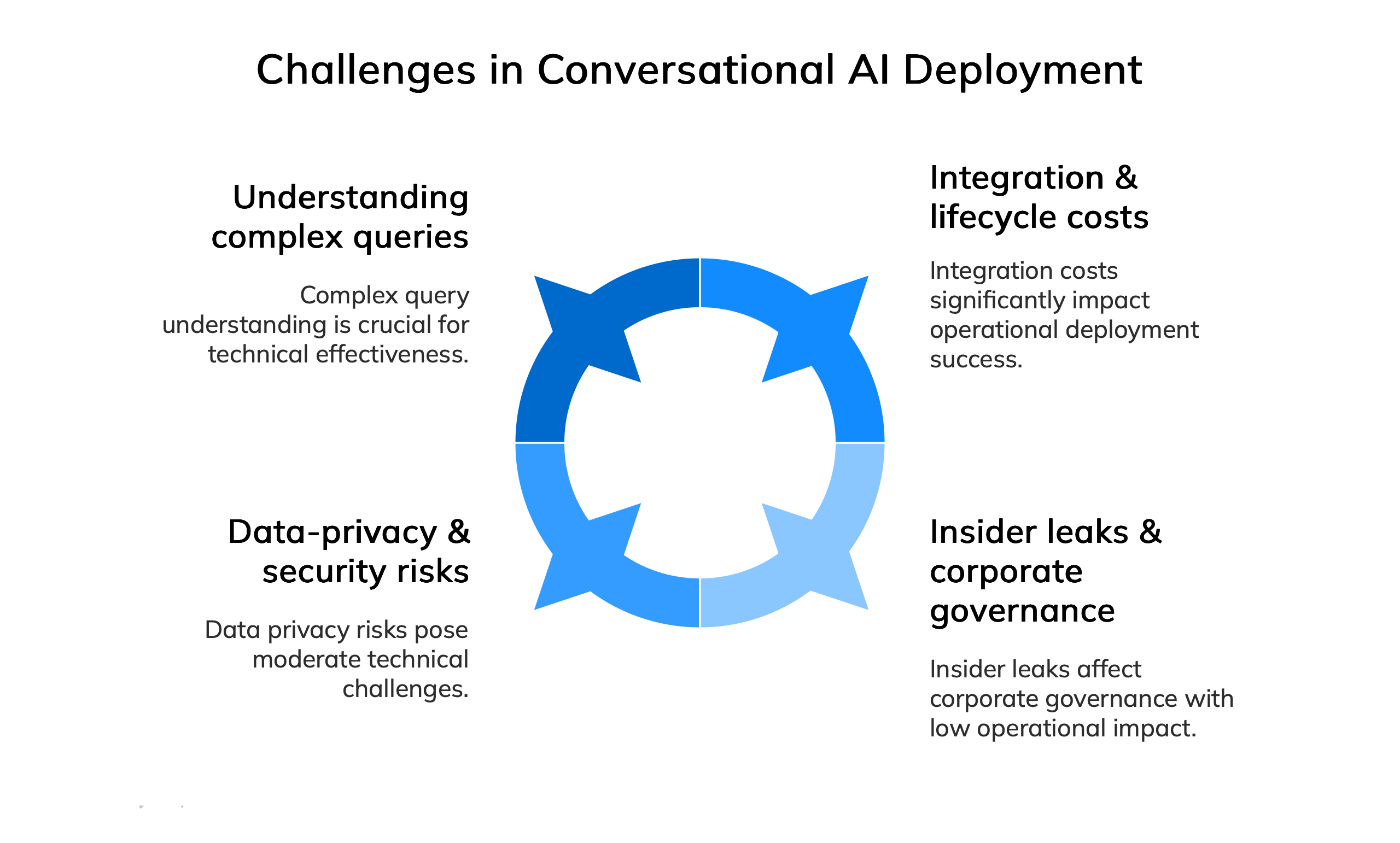

Challenges in using conversational AI

Conversational AI faces a spectrum of technical, ethical, and operational challenges that organizations must address before they can deploy these systems safely at scale.

1. Data-privacy & security risks

Conversational assistants and chatbots collect large volumes of personally identifiable and confidential business data. If storage, transmission, or retention policies are weak, breaches can expose users’ conversations, including e-mail addresses, payment details, medical notes, and trade secrets.

Regulators are beginning to step in: in March 2023, Italy’s data-protection authority temporarily blocked ChatGPT nationwide until OpenAI implemented stronger safeguards and clearer privacy notices.
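One common mitigation is to mask obvious personal data before a message is logged or sent to a third-party model. The sketch below is a deliberately simple, regex-based redactor. Its patterns are illustrative assumptions and will miss many real-world formats, so production systems typically pair rules like these with dedicated PII-detection tooling.

```python
import re

# Illustrative patterns only; real PII detection needs far broader coverage.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    # 13-16 digit card numbers, optionally separated by spaces or dashes.
    "CARD": re.compile(r"\b(?:\d[ -]?){12,15}\d\b"),
    # Phone numbers like +1 415 555 0132 or (415) 555-0132.
    "PHONE": re.compile(r"\+?\d{1,3}[ -]?\(?\d{3}\)?[ -]?\d{3}[ -]?\d{4}\b"),
}


def mask_pii(text):
    """Replace each pattern match with a typed placeholder such as [EMAIL]."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


print(mask_pii("Email jane.doe@example.com, card 4111 1111 1111 1111."))
```

The card pattern runs before the phone pattern, so long digit runs are labeled as cards first. Order matters whenever patterns can overlap.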

2. Understanding complex, multi-step queries

Natural language is inherently ambiguous. Long prompts that mix intents (“Rebook my flight, but only if the price difference is under €50, and then notify my manager”) still trip up even state-of-the-art models. Solving this requires dialogue management, domain ontologies, and real-time retrieval or tool-calling capabilities that are still maturing.
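The flight example shows why tool calling matters. Even after a model has parsed the request, the assistant must run dependent steps in order and respect the condition. The sketch below assumes parsing has already produced a structured plan (the genuinely hard part, hard-coded here). It uses hypothetical stub functions in place of real airline and messaging APIs.

The sketch also assumes the manager is notified only if the rebooking happens. The original sentence is itself ambiguous on that point, which illustrates the problem.

```python
from dataclasses import dataclass


@dataclass
class Fare:
    flight_id: str
    price_eur: float


# Hypothetical stubs standing in for real booking and messaging APIs.
def current_fare(booking_id):
    return Fare("LH123", 220.0)


def cheapest_alternative(booking_id):
    return Fare("LH125", 255.0)


def rebook(booking_id, flight_id):
    return f"Booking {booking_id} moved to {flight_id}"


def notify(recipient, message):
    return f"Sent to {recipient}: {message}"


def handle_request(booking_id, max_diff_eur, manager):
    """Rebook only if the price difference is under the limit, then notify."""
    old, new = current_fare(booking_id), cheapest_alternative(booking_id)
    diff = new.price_eur - old.price_eur
    if diff >= max_diff_eur:
        # Condition failed: skip both dependent steps and explain why.
        return [f"Not rebooked: difference is EUR {diff:.2f}, limit is EUR {max_diff_eur:.2f}"]
    steps = [rebook(booking_id, new.flight_id)]
    steps.append(notify(manager, steps[0]))  # depends on the rebooking result
    return steps


for line in handle_request("BK42", max_diff_eur=50, manager="manager@example.com"):
    print(line)
```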

3. Bias, fairness & content safety

Models learn from internet-scale data that contains misogyny, racism, and other harmful stereotypes. Unless carefully filtered and continuously audited, the system may reproduce or even amplify those biases when it responds.

Microsoft’s Tay chatbot is a cautionary tale: within 24 hours of launch in 2016, online trolls coaxed it into posting racist, genocidal tweets, forcing Microsoft to shut it down.

4. Hallucinations & legal liability

Large language models sometimes generate confident but false statements (“hallucinations”). When those statements name real people or products, they can be defamatory.

U.S. radio host Mark Walters sued OpenAI in 2023 after ChatGPT told a journalist he had been sued for embezzlement, an entirely fictitious case. A Georgia judge dismissed the claim in May 2025, but the episode shows the litigation risk.

5. Integration & lifecycle costs

Embedding a conversational layer into existing customer-service stacks, knowledge bases, or IoT devices requires API gateways, logging, red-teaming, prompt versioning, and staff training. Hidden costs, such as latency optimization, GPU capacity, and ongoing model tuning, often exceed initial proof-of-concept budgets.

6. Insider leaks & corporate governance

Employees may paste source code or unreleased product details into public chatbots for quick assistance, inadvertently disclosing trade secrets. After three engineers uploaded confidential code to ChatGPT in April 2023, Samsung banned all generative-AI tools on its corporate network.

How do you build conversational AI?

The journey is iterative: start with a narrowly scoped assistant, release early, measure relentlessly, and expand in controlled cycles.

| Phase | What to Do | Key Pitfalls |
|---|---|---|
| Define the Business Use-Case & KPIs | Document the problem, target users, and success metrics (e.g., CSAT > 85 %, AHT ↓ 20 %). Prioritise a single high-value workflow (refunds, password reset, triage) to avoid “boil-the-ocean” scope. | Fuzzy goals make later evaluation impossible. |
| Map Compliance & Risk Constraints | List applicable regulations and standards (GDPR, HIPAA, SOC 2), child-safety requirements, latency budgets, and allowed data-retention windows. | Discovering legal blockers after launch delays projects by months. |
| Choose an Architecture | Options range from classical NLU + dialog-manager stacks (e.g., Rasa stories & rules) to LLM-first pipelines that add Retrieval-Augmented Generation (RAG) for grounding. RAG can substantially reduce hallucinations by injecting relevant documents into the prompt at inference time. | Jumping straight to an LLM without retrieval leads to fact-free answers and brand risk. |
| Collect & Label Data | Mine chat logs, FAQs, and call-center transcripts. Cluster similar utterances, then label intents (“track-order”) and entities (“order_id”); even LLM fine-tuning benefits from a high-quality, task-specific corpus. | Over-reliance on synthetic data can bake in bias or outdated facts. |
| Train or Fine-Tune the Core Models | For local control, train Rasa NLU components or fine-tune an open LLM (e.g., Llama 3) with LoRA. For cloud speed, use managed APIs (OpenAI Function Calling, Google Gemini). Validate with cross-validation and out-of-distribution tests. | Focusing only on top-1 intent accuracy while neglecting entity extraction causes silent failures. |
| Design Dialogue Management | Implement state tracking, slots, and guardrails (“Do not give medical diagnosis”). Story-driven frameworks, finite-state machines, or LLM policy models can orchestrate turns. Always include a human-handoff escape hatch. | Glossing over unhappy paths (interruptions, profanity) torpedoes CX in production. |
| Integrate Tools & Back-End APIs | Connect order systems, CRMs, and payment gateways through secure function calls. Orchestration frameworks (LangChain, Microsoft AutoGen) can route user intents to the right tool. | Leaking credentials in prompts or logs is a real incident class. |
| Rigorous Evaluation & Red-Teaming | Track intent recall, factuality, toxicity, and latency. Use adversarial prompts and demographic stress tests. Internal “breakathons” help catch Tay-style failures before the public does. | Shipping with only happy-path unit tests invites reputational damage. |
| Deploy, Scale & Optimise | Containerise the service (Kubernetes, serverless), add autoscaling GPU/CPU pools, and embed observability hooks. Amazon’s 2025 Alexa+ rebuild even used generative AI to grade responses during RL fine-tuning, pointing to the future of self-optimising voice stacks. | Ignoring GPU availability during traffic spikes leads to time-outs and SLA breaches. |
| Monitor & Continuously Improve | Collect conversation analytics, user feedback, and error logs. Retrain weekly or via online learning; rotate prompt versions; review sampled dialogues for bias drift. Automate regression tests before each rollout. | “Fire-and-forget” mentality: models become stale in weeks as policies, products, or language shift. |
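The "Design Dialogue Management" phase combines three ideas: state tracking with slots, guardrails, and a human-handoff escape hatch. Below is a minimal, framework-free sketch of all three for a hypothetical refund workflow. The slot names, the blocked-topic list, the toy entity extraction, and the two-failure handoff rule are assumptions chosen for illustration, not a recommended policy.

```python
import re
from dataclasses import dataclass, field

BLOCKED_TOPICS = ("diagnosis", "diagnose", "prescription")  # hypothetical guardrail list
REQUIRED_SLOTS = ("order_id", "reason")                      # hypothetical refund slots
MAX_FAILURES = 2                                             # hypothetical handoff threshold


@dataclass
class DialogueState:
    slots: dict = field(default_factory=dict)
    failures: int = 0
    handed_off: bool = False


def extract_slots(text):
    """Toy entity extraction: an order id like 'A1234' and a reason keyword."""
    found = {}
    match = re.search(r"\b[A-Z]\d{4}\b", text)
    if match:
        found["order_id"] = match.group()
    for reason in ("damaged", "late", "wrong size"):
        if reason in text.lower():
            found["reason"] = reason
    return found


def next_turn(state, user_text):
    """Update the dialogue state with one user message and return the bot reply."""
    if state.handed_off:
        return "A human agent is handling this conversation."
    if any(topic in user_text.lower() for topic in BLOCKED_TOPICS):
        return "I can't help with medical questions, but I can help with your refund."
    new_slots = extract_slots(user_text)
    if new_slots:
        state.slots.update(new_slots)
        state.failures = 0
    else:
        state.failures += 1
        if state.failures >= MAX_FAILURES:  # escape hatch after repeated misses
            state.handed_off = True
            return "Let me connect you with a human agent."
    missing = [slot for slot in REQUIRED_SLOTS if slot not in state.slots]
    if missing:
        return f"Could you tell me your {missing[0].replace('_', ' ')}?"
    return f"Refund requested for order {state.slots['order_id']} ({state.slots['reason']})."


state = DialogueState()
for message in ["I want a refund", "Order A1234 arrived damaged"]:
    print(next_turn(state, message))
```

The guardrail check runs before slot filling, so a blocked request never reaches the business logic. Frameworks such as Rasa express the same ideas declaratively, but the control flow is similar.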

Sounds pretty tricky, right? 🤪 That’s why we’ve created CogniAgent – a no-code conversational AI builder that helps you create your own customized AI agent.

How can CogniAgent help you build your conversational AI agent?

- Blueprint in minutes – A drag-and-drop canvas and industry-specific templates (Sales, Marketing, Support, Operations, Research) let you set goals, triggers, and KPIs without code, so you can scope a working agent on day one.

- Instant knowledge injection – Upload PDFs, videos, websites, or spreadsheets; CogniAgent converts everything into vector embeddings automatically and connects it to your bot with Retrieval-Augmented Generation. Continuous sync keeps knowledge current, with no manual retraining.

- Emotion-aware voice and multichannel delivery – Built-in ASR, neural TTS, and real-time sentiment detection adjust tone, pace, and persona on the fly. Deploy the same agent across phone, chat, email, and social channels from one dashboard.

- Domain-specific automation blocks – Pre-built actions like “Create Zendesk Ticket,” “Fetch Invoice,” and “Check PTO Balance,” plus one-click connectors to CRMs, ERPs, REST, and SQL endpoints, remove glue code and power multi-step workflows.

- Effortless, continuous learning – Supervisor edits become fine-tuning data automatically, while active learning flags uncertain answers. Your agent improves as your team works – no separate annotation projects are needed.

- Guardrails and compliance by default – PII masking, moderation, and a visual policy editor help you meet GDPR, HIPAA, and SOC 2 requirements. Risk teams can add “no-go” rules (e.g., no medical advice) without touching code.

- One-click scale with clear ROI – Container deployments (Docker/K8s) autoscale from CPU to GPU, and a single dashboard tracks latency, CSAT, containment, and cost. Customers typically see 35 % higher efficiency and 40 % lower service costs—all for $0.15 per voice minute.

- Continuous improvement loop – Conversation analytics surface intent gaps and sentiment trends; scheduled retraining pushes fixes live with zero downtime, and version control keeps every prompt, workflow, and data snapshot audit-ready.
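Retrieval-Augmented Generation appears both in the architecture table and in the knowledge-injection bullet above. At its core, it is a retrieve-then-prompt pattern. The sketch below is a generic illustration, not CogniAgent's implementation. It ranks hypothetical knowledge-base chunks with bag-of-words cosine similarity (real systems use learned embeddings and a vector index). It then assembles a grounded prompt that a language model would complete.

```python
import math
import re
from collections import Counter


def vectorize(text):
    """Bag-of-words term counts; a stand-in for a learned embedding."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[term] * b[term] for term in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query, chunks, k=2):
    """Return the k chunks most similar to the query."""
    q = vectorize(query)
    ranked = sorted(chunks, key=lambda c: cosine(q, vectorize(c)), reverse=True)
    return ranked[:k]


def build_prompt(query, chunks, k=2):
    """Inject retrieved text so the model answers from sources, not memory."""
    context = "\n".join(f"- {c}" for c in retrieve(query, chunks, k))
    return (
        "Answer using only the context below. If the answer is not there, say so.\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


# Hypothetical knowledge-base chunks (e.g., split from an uploaded PDF).
KB = [
    "Refunds are processed within 5 business days of approval.",
    "Our support line is open Monday to Friday, 9am to 6pm.",
    "Damaged items can be returned for a full refund within 30 days.",
]

print(build_prompt("How long do refunds take?", KB))
```

The instruction to answer only from the supplied context is what lets retrieval reduce hallucinations. The model still has to follow that instruction, which is why the evaluation phase remains necessary.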

Final Thought

Ultimately, the answer to “What is conversational AI?” is that it has become a mission-critical layer shaping every interaction customers, employees, and partners have with your brand. By blending natural language understanding with real-time data and automation, businesses unlock faster service, reduce the need for human intervention, gain deeper insights, and make measurable gains in efficiency and revenue.

Yet the real promise lies ahead: every conversation captured today becomes training fuel for an even more competent agent tomorrow, creating a compounding advantage for early adopters. Tools like CogniAgent lower the barrier to entry, letting teams focus on value rather than plumbing and compliance headaches.

As regulations tighten and customer expectations soar, the winners will be those who turn AI conversations into sustained relationships and continuous learning loops. In short, the question is no longer whether you should embrace conversational AI but how quickly you can put it to work.

Conversational AI FAQ

What makes AI conversational?

Conversational AI combines natural-language understanding (to parse intent and entities), dialogue management (to track context and decide the next action), and natural-language generation (to craft human-like responses). Voice systems add speech recognition on the input side and speech synthesis on the output side.

These components let the system engage in multi-turn exchanges that feel coherent and context-aware rather than one-off command processing. Continuous learning from user interactions refines its language model and behavior, making each conversation smarter.
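Multi-turn coherence usually comes from resending the conversation history with each request. Many chat-model APIs represent that history as a list of role/content messages. The sketch below keeps such a list and trims the oldest turns to fit a budget. The `Conversation` class is hypothetical, not any vendor's SDK, and the word-count budget is a crude stand-in for real token counting.

```python
from dataclasses import dataclass, field


@dataclass
class Conversation:
    system_prompt: str
    max_words: int = 50                      # hypothetical context budget
    turns: list = field(default_factory=list)

    def add(self, role, content):
        self.turns.append({"role": role, "content": content})

    def _words(self, messages):
        return sum(len(m["content"].split()) for m in messages)

    def context(self):
        """System prompt plus as many recent turns as fit in the budget."""
        system = {"role": "system", "content": self.system_prompt}
        kept = []
        for message in reversed(self.turns):  # newest first
            if self._words([system, message] + kept) > self.max_words:
                break
            kept.insert(0, message)
        return [system] + kept


chat = Conversation("You are a helpful travel assistant.", max_words=20)
chat.add("user", "What's the weather in Lisbon tomorrow?")
chat.add("assistant", "Sunny, around 24 degrees.")
chat.add("user", "And the day after?")  # only meaningful with the earlier turns
for message in chat.context():
    print(message["role"], ":", message["content"])
```

With `max_words=15`, the Lisbon question would be dropped, and "And the day after?" would lose its meaning. That is how long conversations lose context when the history outgrows the model's window.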

What is the best conversational AI?

There’s no single “best” conversational AI software for every scenario – benchmarks as of mid-2025 put OpenAI’s GPT-4o at the top for general reasoning and multilingual dialogue, with Claude 3 Opus and Google Gemini 1.5 Pro close behind.

For enterprises needing complete data control or extreme customization, open-weight models such as Llama 3-70B or Mistral’s Mixtral mixture-of-experts models can outperform larger proprietary systems on narrow tasks once fine-tuned on domain-specific data.

If you would rather not work with models directly, low-code conversational AI builders include Google Dialogflow CX, Microsoft Copilot Studio (formerly Power Virtual Agents), CogniAgent, and Botpress Cloud.

Ultimately, the best choice is the model that best meets your particular needs in accuracy, latency, cost, and governance rather than any leaderboard champion.

Is ChatGPT a conversational AI?

Yes. ChatGPT is a conversational AI. It uses large language models to understand user input, keep track of context across turns, and generate coherent, human-like replies. Because of this multi-turn dialogue capability, it meets the core definition of conversational AI systems.

![What is Conversational AI Examples, Use Cases, Pros & Cons [Banner]](https://cogniagent.ai/wp-content/uploads/2025/06/What-is-Conversational-AI-Examples-Use-Cases-Pros-Cons-Banner.png)